In computing, demand for speed and efficiency keeps growing. As industries rely more heavily on data-intensive applications, Graphics Processing Units (GPUs) have taken on a much larger role. One of the most widely used tools for harnessing GPUs is CUDA (Compute Unified Device Architecture), a parallel computing platform created by NVIDIA. This article introduces the essentials of CUDA programming in the spirit of the book "CUDA by Example: An Introduction to General-Purpose GPU Programming." By working through practical examples and applications, readers can see how CUDA can speed up computational work.

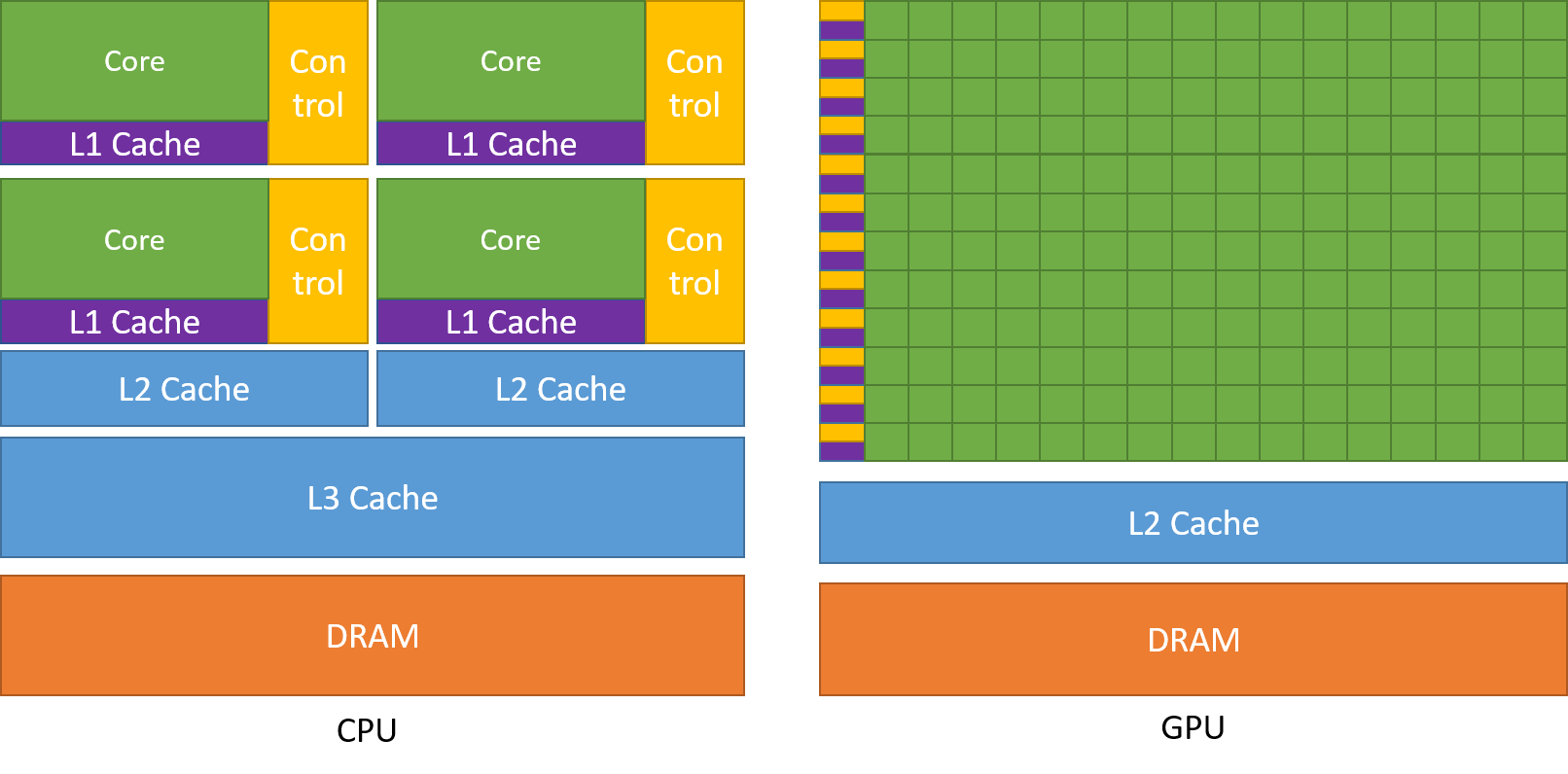

CUDA offers a programming model that lets developers use the massively parallel hardware of GPUs for general-purpose computing tasks. CPUs are optimized for running a modest number of threads with low latency, while GPUs are built for throughput and can keep thousands of threads in flight at once. This capability is particularly beneficial for applications in machine learning, scientific simulations, and real-time data processing. In the sections that follow, we cover CUDA's principles, syntax, and structure, so that you can apply GPU programming in your own projects.

Along the way, we address common questions and challenges that developers face. Understanding the underlying GPU architecture, and how to write and optimize CUDA programs for it, is crucial for anyone who wants to take advantage of this technology. This article provides clear explanations, practical examples, and tips for getting started with CUDA programming. Get ready to dive into the world of parallel computing!

What is CUDA and Why is it Important?

CUDA is a parallel computing platform and programming model created by NVIDIA. It lets developers write code that runs on the GPU in CUDA C/C++, an extension of C and C++, and call it from an ordinary host program. For workloads that can be split into many independent calculations, this can significantly increase computational speed, because those calculations are performed simultaneously. Here are some key points about CUDA:

- Designed to improve performance for highly parallel applications.

- Widely used in fields such as deep learning, scientific computing, and image processing.

- Provides direct access to GPU resources from C/C++ code, without going through a graphics API. Getting good performance still requires some understanding of the hardware.

How Does CUDA Work?

CUDA operates on the principle of parallelism, allowing developers to break down complex tasks into smaller, manageable pieces. Each piece can be processed independently and simultaneously on the GPU. Understanding the architecture of CUDA is essential to fully leverage its capabilities.
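To make the idea of independent pieces concrete, here is a minimal sketch in which each GPU thread scales exactly one array element. The names `scale` and `scale_on_gpu` are hypothetical, chosen for illustration, and error checking is left out to keep the example short.

```cuda
#include <vector>
#include <cuda_runtime.h>

// Kernel: each thread scales one element, a small piece of work that does not
// depend on any other thread.
__global__ void scale(float* data, float factor, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;  // global index of this thread
    if (i < n) {                                    // the last block may have spare threads
        data[i] *= factor;
    }
}

// Hypothetical host helper: copy the input to the GPU, run the kernel, copy back.
// Error checking is omitted for brevity.
std::vector<float> scale_on_gpu(std::vector<float> values, float factor) {
    int n = static_cast<int>(values.size());
    if (n == 0) return values;
    size_t bytes = n * sizeof(float);

    float* d_data = nullptr;
    cudaMalloc(&d_data, bytes);
    cudaMemcpy(d_data, values.data(), bytes, cudaMemcpyHostToDevice);

    int threadsPerBlock = 256;
    int blocks = (n + threadsPerBlock - 1) / threadsPerBlock;  // round up to cover n
    scale<<<blocks, threadsPerBlock>>>(d_data, factor, n);

    // This copy waits for the kernel to finish before reading the results.
    cudaMemcpy(values.data(), d_data, bytes, cudaMemcpyDeviceToHost);
    cudaFree(d_data);
    return values;
}
```

Calling `scale_on_gpu({1.0f, 2.0f, 3.0f}, 2.0f)` from a `main` function should return the doubled values. The same pattern of copying in, launching, and copying out appears in most introductory CUDA programs.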

What are the Key Components of CUDA?

CUDA programming consists of various components that work together to facilitate parallel processing:

- CUDA C/C++: The primary programming language used for developing CUDA applications.

- Kernels: Functions that run on the GPU and are executed by multiple threads in parallel.

- Threads: The smallest unit of execution in CUDA, where thousands can operate simultaneously.

- Blocks: Groups of threads that work together, allowing for synchronization and shared memory access.

- Grids: The collection of all blocks launched for a single kernel call. Together, the threads of a grid typically cover the entire dataset being processed.
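The relationship between threads, blocks, and grids listed above can be sketched with a kernel in which every thread records its own position. The names `record_ids`, `LaunchIds`, and `launch_and_record` are hypothetical. The sketch assumes a one-dimensional launch and a block size within the device limit (1024 threads per block on current GPUs), and it omits error checking.

```cuda
#include <vector>
#include <cuda_runtime.h>

// Kernel: every thread writes the block it belongs to and its index inside that block.
__global__ void record_ids(int* block_of, int* thread_of, int n) {
    int global = blockIdx.x * blockDim.x + threadIdx.x;  // position within the whole grid
    if (global < n) {
        block_of[global] = blockIdx.x;
        thread_of[global] = threadIdx.x;
    }
}

// Hypothetical container for the recorded ids.
struct LaunchIds {
    std::vector<int> block_of;
    std::vector<int> thread_of;
};

// Launches a grid of `blocks` blocks with `threads_per_block` threads each.
// Assumes both arguments are positive.
LaunchIds launch_and_record(int blocks, int threads_per_block) {
    int n = blocks * threads_per_block;
    size_t bytes = n * sizeof(int);

    int* d_block = nullptr;
    int* d_thread = nullptr;
    cudaMalloc(&d_block, bytes);
    cudaMalloc(&d_thread, bytes);

    record_ids<<<blocks, threads_per_block>>>(d_block, d_thread, n);

    LaunchIds ids{std::vector<int>(n), std::vector<int>(n)};
    cudaMemcpy(ids.block_of.data(), d_block, bytes, cudaMemcpyDeviceToHost);
    cudaMemcpy(ids.thread_of.data(), d_thread, bytes, cudaMemcpyDeviceToHost);

    cudaFree(d_block);
    cudaFree(d_thread);
    return ids;
}
```

With `launch_and_record(3, 4)`, the grid holds 12 threads. The thread at global index 5 sits in block 1 as thread 1, because 5 = 1 × 4 + 1.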

How Do You Get Started with CUDA Programming?

Getting started with CUDA programming involves several steps:

- Install the CUDA Toolkit: Download and install the toolkit from the NVIDIA website to get the compiler, tools, and libraries. You also need a CUDA-capable NVIDIA GPU and a compatible driver.

- Set Up Your Development Environment: Use an Integrated Development Environment (IDE) like Visual Studio or Eclipse for coding.

- Write Your First Kernel: Start with a simple example to understand how kernels work and how to launch them on the GPU.

- Compile and Run Your Program: Use the nvcc compiler included in the toolkit to compile your CUDA code.
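As a sketch of the "first kernel" step, the code below adds two integers on the GPU with a single thread. It is similar in spirit to the introductory examples found in many CUDA tutorials. The helper name `add_on_gpu` is hypothetical, and error checking is omitted.

```cuda
#include <cuda_runtime.h>

// Kernel: runs on the GPU and stores a + b in device memory.
__global__ void add(int a, int b, int* result) {
    *result = a + b;
}

// Hypothetical host helper: allocates device memory for the result, launches the
// kernel with one block of one thread, and copies the answer back to the host.
int add_on_gpu(int a, int b) {
    int* d_result = nullptr;
    int h_result = 0;

    cudaMalloc(&d_result, sizeof(int));
    add<<<1, 1>>>(a, b, d_result);  // <<<blocks, threads per block>>>
    cudaMemcpy(&h_result, d_result, sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d_result);

    return h_result;
}
```

To try it, add a `main` function that prints `add_on_gpu(2, 7)` and save the file with a `.cu` extension. Assuming the file is called `first_kernel.cu` (a hypothetical name), it can be built with `nvcc -o first_kernel first_kernel.cu` and then run like any other executable.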

What are Common Pitfalls in CUDA Programming?

While CUDA programming opens up new possibilities, there are common pitfalls that developers should be aware of:

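- Memory Management: Forgetting to free device memory, mixing up host and device pointers, or ignoring the error codes returned by CUDA calls can lead to performance bottlenecks or crashes.

- Overlapping Data Transfer: Failing to overlap host-device data transfers with kernel execution, for example by using streams and asynchronous copies, can leave the GPU idle while it waits for data.

- Kernel Optimization: Kernels that launch too few threads, branch divergently, or access memory in scattered patterns can leave GPU resources underutilized.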
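The first two pitfalls can be illustrated together. The sketch below wraps every runtime call in an error-checking macro and splits the work across two streams so that copies and kernels from different halves may overlap. `CUDA_CHECK`, `add_one`, and `process_in_two_streams` are hypothetical names. The code assumes `n` is even and at least 2, and whether any overlap actually occurs depends on the GPU.

```cuda
#include <cstdio>
#include <cstdlib>
#include <cuda_runtime.h>

// Abort with a readable message if a CUDA runtime call fails.
#define CUDA_CHECK(call)                                                 \
    do {                                                                 \
        cudaError_t err_ = (call);                                       \
        if (err_ != cudaSuccess) {                                       \
            std::fprintf(stderr, "CUDA error: %s at %s:%d\n",            \
                         cudaGetErrorString(err_), __FILE__, __LINE__);  \
            std::exit(EXIT_FAILURE);                                     \
        }                                                                \
    } while (0)

__global__ void add_one(float* data, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] += 1.0f;
}

// Processes n floats in two halves, each on its own stream.
// Returns true if every element came back as its input value plus one.
bool process_in_two_streams(int n) {
    const int kStreams = 2;
    int chunk = n / kStreams;  // assumption: n is even and >= 2
    size_t chunk_bytes = chunk * sizeof(float);

    float* h_data = nullptr;
    float* d_data = nullptr;
    // Pinned (page-locked) host memory is needed for truly asynchronous copies.
    CUDA_CHECK(cudaMallocHost(&h_data, n * sizeof(float)));
    CUDA_CHECK(cudaMalloc(&d_data, n * sizeof(float)));
    for (int i = 0; i < n; ++i) h_data[i] = static_cast<float>(i);

    cudaStream_t streams[kStreams];
    for (int s = 0; s < kStreams; ++s) CUDA_CHECK(cudaStreamCreate(&streams[s]));

    for (int s = 0; s < kStreams; ++s) {
        int offset = s * chunk;
        CUDA_CHECK(cudaMemcpyAsync(d_data + offset, h_data + offset, chunk_bytes,
                                   cudaMemcpyHostToDevice, streams[s]));
        add_one<<<(chunk + 255) / 256, 256, 0, streams[s]>>>(d_data + offset, chunk);
        CUDA_CHECK(cudaGetLastError());  // catches invalid launch configurations
        CUDA_CHECK(cudaMemcpyAsync(h_data + offset, d_data + offset, chunk_bytes,
                                   cudaMemcpyDeviceToHost, streams[s]));
    }
    CUDA_CHECK(cudaDeviceSynchronize());  // wait for both streams to finish

    bool ok = true;
    for (int i = 0; i < n; ++i) ok = ok && (h_data[i] == static_cast<float>(i) + 1.0f);

    for (int s = 0; s < kStreams; ++s) CUDA_CHECK(cudaStreamDestroy(streams[s]));
    CUDA_CHECK(cudaFree(d_data));
    CUDA_CHECK(cudaFreeHost(h_data));
    return ok;
}
```

Work issued to the same stream runs in order, while work in different streams is allowed to run concurrently. A profiler such as Nsight Systems can show whether the copies and kernels really overlap on a given machine.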

How Can You Optimize Your CUDA Code?

Optimizing CUDA code is essential for maximizing performance. Here are some strategies:

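- Minimize Memory Transfers: Transfer data between the host and device as infrequently as possible, and keep intermediate results on the GPU between kernel launches.

- Use Shared Memory: Shared memory is a small, fast, on-chip memory visible to all threads in a block. Staging data there pays off when threads in a block reuse the same values or need to cooperate.

- Optimize Thread Usage: Launch enough blocks to keep every multiprocessor busy, and choose block sizes that are a multiple of the 32-thread warp size so that no lanes sit idle.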
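The shared memory and thread usage advice can be sketched with a sum reduction, a pattern similar to the dot-product examples found in many CUDA tutorials. Each block accumulates a partial sum in shared memory, and the host adds the per-block results. `block_sum`, `sum_on_gpu`, and `kBlockSize` are hypothetical names, the cap of 64 blocks is an arbitrary choice for illustration, and error checking is omitted.

```cuda
#include <algorithm>
#include <vector>
#include <cuda_runtime.h>

constexpr int kBlockSize = 256;  // must be a power of two for the halving loop below

// Kernel: each block writes the sum of the elements it handled to partial[blockIdx.x].
__global__ void block_sum(const float* in, float* partial, int n) {
    __shared__ float cache[kBlockSize];  // one slot per thread, shared within the block

    // Grid-stride loop: a fixed-size grid can cover an input of any length.
    float sum = 0.0f;
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n;
         i += gridDim.x * blockDim.x) {
        sum += in[i];
    }
    cache[threadIdx.x] = sum;
    __syncthreads();  // every thread must finish writing before anyone reads

    // Tree reduction in shared memory: halve the number of active threads each step.
    for (int stride = blockDim.x / 2; stride > 0; stride /= 2) {
        if (threadIdx.x < stride) {
            cache[threadIdx.x] += cache[threadIdx.x + stride];
        }
        __syncthreads();  // outside the if, so all threads in the block reach it
    }

    if (threadIdx.x == 0) partial[blockIdx.x] = cache[0];
}

// Hypothetical host helper: returns the sum of all values.
float sum_on_gpu(const std::vector<float>& values) {
    int n = static_cast<int>(values.size());
    if (n == 0) return 0.0f;
    int blocks = std::min(64, (n + kBlockSize - 1) / kBlockSize);

    float* d_in = nullptr;
    float* d_partial = nullptr;
    cudaMalloc(&d_in, n * sizeof(float));
    cudaMalloc(&d_partial, blocks * sizeof(float));
    cudaMemcpy(d_in, values.data(), n * sizeof(float), cudaMemcpyHostToDevice);

    block_sum<<<blocks, kBlockSize>>>(d_in, d_partial, n);

    // Only the small array of partial sums travels back to the host.
    std::vector<float> partial(blocks);
    cudaMemcpy(partial.data(), d_partial, blocks * sizeof(float), cudaMemcpyDeviceToHost);
    cudaFree(d_in);
    cudaFree(d_partial);

    float total = 0.0f;
    for (float p : partial) total += p;
    return total;
}
```

Only one float per block is copied back instead of the whole array, which also illustrates the advice about minimizing transfers. Because floating-point addition is not associative, the result can differ slightly from a sequential CPU sum for general inputs.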

What Resources are Available for Learning CUDA?

Various resources can help you learn CUDA programming effectively:

- Books: "CUDA by Example: An Introduction to General-Purpose GPU Programming" by Jason Sanders and Edward Kandrot is a highly recommended book for beginners.

- Online Courses: Platforms such as Coursera and Udacity have offered courses on GPU and parallel programming with CUDA. Check their current catalogs for availability.

- Tutorials and Documentation: The NVIDIA developer website provides extensive documentation and tutorials, including the CUDA C++ Programming Guide.

What are the Applications of CUDA in Real-World Scenarios?

CUDA has numerous applications across various industries, including:

- Machine Learning: Accelerating the training of deep learning models.

- Scientific Research: Simulating complex physical phenomena in computational physics and chemistry.

- Image and Video Processing: Applying filters and transformations to images and processing video streams in real time.
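As a small illustration of the image processing case, the sketch below converts an interleaved RGB image to grayscale with one thread per pixel on a two-dimensional grid. `rgb_to_gray` and `to_gray` are hypothetical names. The integer weights 77, 150, and 29 (out of 256) are an approximation of the common 0.299, 0.587, 0.114 luma weights, chosen here so that the results are exact integers. Error checking is omitted, and the image is assumed to have positive width and height.

```cuda
#include <vector>
#include <cuda_runtime.h>

// Kernel: one thread per pixel. Input is interleaved RGB, 3 bytes per pixel.
__global__ void rgb_to_gray(const unsigned char* rgb, unsigned char* gray,
                            int width, int height) {
    int x = blockIdx.x * blockDim.x + threadIdx.x;  // column
    int y = blockIdx.y * blockDim.y + threadIdx.y;  // row
    if (x < width && y < height) {
        int p = y * width + x;
        int r = rgb[3 * p];
        int g = rgb[3 * p + 1];
        int b = rgb[3 * p + 2];
        // Weights sum to 256, so shifting right by 8 keeps the result in 0..255.
        gray[p] = static_cast<unsigned char>((77 * r + 150 * g + 29 * b) >> 8);
    }
}

// Hypothetical host helper: returns one gray byte per pixel.
std::vector<unsigned char> to_gray(const std::vector<unsigned char>& rgb,
                                   int width, int height) {
    size_t pixels = static_cast<size_t>(width) * height;

    unsigned char* d_rgb = nullptr;
    unsigned char* d_gray = nullptr;
    cudaMalloc(&d_rgb, 3 * pixels);
    cudaMalloc(&d_gray, pixels);
    cudaMemcpy(d_rgb, rgb.data(), 3 * pixels, cudaMemcpyHostToDevice);

    dim3 block(16, 16);  // 256 threads arranged as a 16 x 16 tile
    dim3 grid((width + block.x - 1) / block.x,
              (height + block.y - 1) / block.y);  // enough tiles to cover the image
    rgb_to_gray<<<grid, block>>>(d_rgb, d_gray, width, height);

    std::vector<unsigned char> gray(pixels);
    cudaMemcpy(gray.data(), d_gray, pixels, cudaMemcpyDeviceToHost);
    cudaFree(d_rgb);
    cudaFree(d_gray);
    return gray;
}
```

The two-dimensional block and grid shapes mirror the layout of the image, which keeps the index arithmetic close to the way the problem is usually described.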

Conclusion: Why Should You Explore CUDA Programming?

As we wrap up our exploration of CUDA programming, it's clear that this tool can significantly improve computational efficiency for parallel workloads and open new avenues for innovation. Whether you are a seasoned developer or just starting out, working through examples, as the book "CUDA by Example: An Introduction to General-Purpose GPU Programming" does, is an effective way to learn to tackle complex problems on the GPU. Embrace parallel computing, and you will be better equipped to meet the demands of modern data-driven applications.